I was recently confronted with a dilemma.

If I was given a fresh start at building a secure DMZ environment, could I justify the cost of adding Web Application Firewalls into my DMZ? Would they adequately add to the Risk Reduction?

A WAF or (Web Application Firewall), sometimes called a "Layer 7 firewall" implements protocol and application inspection for HTTP(S) traffic. There are two primary categories of application firewalls, network-based application firewalls and host-based application firewalls.

A traditional firewall works on "Layer 3" and simply follows rules, allowing or denying "Source Address", "Destination Address", and "TCP/UDP Port" combinations. Most current firewalls implement "Stateful Inspection" of traffic at that layer, but that simply ensures that the TCP protocol rules are followed for network traffic across the firewall. Any traffic transferred via HTTP or HTTPS is simply allowed to pass if it follows the simple source/destination/port rules in the firewall.

This ability to communicate with a web server unhindered or un-inspected, coupled with software vulnerabilities and poor coding practices, has been one of the primary vehicles for Data Breaches, Denial of Service attacks, and malicious Site Tampering.

By inspecting a web page's forms, and manipulating the return communications, a malicious attacker could add or inject information that causes the site to not behave as designed. This could have the undesired effect of dumping all the data available in your website's backend database, or potentially allowing the attacker to take control of your site and or Webserver.

So..... What can a WAF do that traditional a traditional firewall / Intrusion prevention cannot?

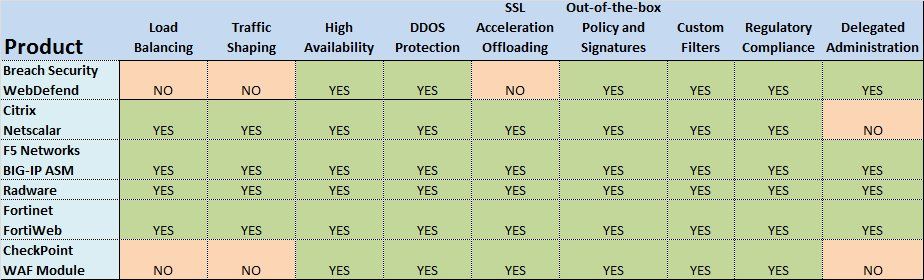

Over the past decade, the industry has come up with standards for Web Application Firewall Evaluation Criteria (WAFEC) to allow us end users and security practitioners to be able to compare and select an appropriate product.

With me so far?

Sounds like a perfectly reasonable infrastructure to add to your DMZ security arsenal.

However.... When the biggest players were put to the test here, it was demonstrated that a well tuned IPS system can be as or more effective than a Web Application Firewall. In addition, by adding Dynamic Application Security tools to you Software Development Lifecycle, you could significantly increase the effectiveness of either WAF or IPS.

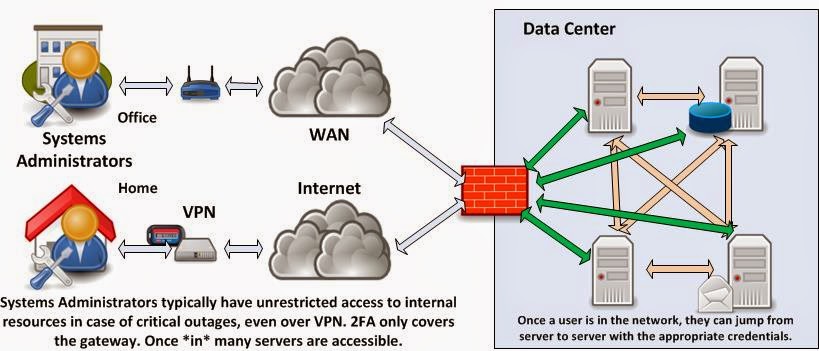

If you've been following my Blog to date, you know that I'm a big proponent of "Lock down access to your servers and data on the system itself! Keep your Data Security as close to the data as possible. "

That said....

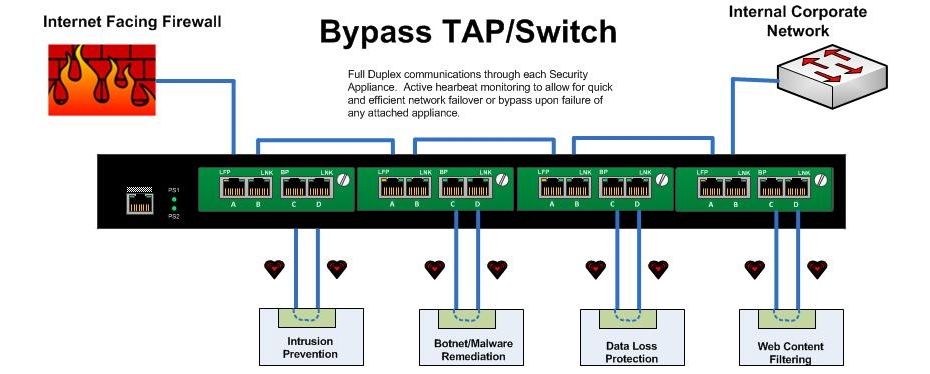

If your DMZ has a healthy mix of Layer-3 firewall up front, with a "Least Access" rule base, a decent IPS infrastructure INLINE, you subscribe to vulnerability testing and remediation and Software Development Lifecycle practices, then you are at least as secure as any commercial WAF solution.

If you add local host protection to your DMZ servers and tune it specifically for the Business Purpose of that asset, you will have well exceeded any benefit derived from implementing WAF.

References:

OWASP: Why WAFs fail

Web Application Security Consortium

Web Security Glossary

ICSALabs: Importance of Web Application Firewall Technology

https://www.icsalabs.com/products?tid%5B%5D=4227

Analyst Report: Analyzing the Effectiveness of Web Application Firewalls

Advanced Persistent Threats get more advanced, persistent and threatening

Beyond Heuristics: Learning to Classify Vulnerabilities and Predict Exploits

Network Traffic Anomaly Detection and Evaluation

Gartner: Magic Quadrant for Dynamic Application Security Testing

Top 5 best practices for firewall administrators

SANS: IPS Deployment Strategies

If I were given a fresh start at building a secure DMZ environment, could I justify the cost of adding Web Application Firewalls to my DMZ? Would they reduce risk enough to be worth it?

A WAF (Web Application Firewall), sometimes called a "Layer 7 firewall", implements protocol and application inspection for HTTP(S) traffic. There are two primary categories of application firewalls: network-based and host-based.

A traditional firewall works at "Layer 3" and "Layer 4": it simply follows rules that allow or deny combinations of "Source Address", "Destination Address", and "TCP/UDP Port". Most current firewalls implement "Stateful Inspection" of traffic at those layers, but that only ensures that the TCP protocol rules are followed for traffic crossing the firewall. Any traffic carried over HTTP or HTTPS is allowed to pass as long as it matches the source/destination/port rules in the firewall.
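To make that concrete, here is a minimal sketch of first-match rule evaluation on address, protocol, and port. The `Rule`, `Packet`, and `decide` names and the documentation-range IP addresses are hypothetical, not taken from any real firewall product, and the payload is shown in clear text only for illustration (over HTTPS it would be encrypted anyway). The point is that the payload never takes part in the decision.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    src: str     # source network in CIDR form
    dst: str     # destination network in CIDR form
    proto: str   # "tcp" or "udp"
    port: int    # destination port
    action: str  # "allow" or "deny"


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    proto: str
    port: int
    payload: bytes  # carried along, but never examined by decide()


def decide(rules, packet, default="deny"):
    """Return the action of the first rule that matches the packet header."""
    for rule in rules:
        if (ip_address(packet.src) in ip_network(rule.src)
                and ip_address(packet.dst) in ip_network(rule.dst)
                and packet.proto == rule.proto
                and packet.port == rule.port):
            return rule.action
    return default  # "Least Access": anything not explicitly allowed is denied


# Hypothetical rule base: anyone may reach the web server on TCP/443.
RULES = [Rule("0.0.0.0/0", "192.0.2.10/32", "tcp", 443, "allow")]

benign = Packet("198.51.100.7", "192.0.2.10", "tcp", 443,
                b"GET /index.html HTTP/1.1")
hostile = Packet("198.51.100.7", "192.0.2.10", "tcp", 443,
                 b"GET /item?id=1' OR '1'='1 HTTP/1.1")
ssh_probe = Packet("198.51.100.7", "192.0.2.10", "tcp", 22, b"")

print(decide(RULES, benign))     # allow
print(decide(RULES, hostile))    # allow: same header, payload is not inspected
print(decide(RULES, ssh_probe))  # deny
```

The benign and the hostile request get the same verdict because they share the same source, destination, and port.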

This ability to communicate with a web server unhindered and uninspected, coupled with software vulnerabilities and poor coding practices, has been one of the primary vehicles for Data Breaches, Denial of Service attacks, and malicious Site Tampering.

By inspecting a web page's forms and manipulating the data sent back to the server, a malicious attacker could inject information that causes the site to behave in ways it was not designed to. This could have the undesired effect of dumping all the data available in your website's backend database, or potentially allowing the attacker to take control of your site and/or web server.
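As an illustration of that kind of injection, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `users` table, its contents, and the `lookup_unsafe` / `lookup_safe` helpers are made up for this example. It shows how a form value pasted straight into SQL text can dump every row, and how a parameterized query treats the same value as plain data.

```python
import sqlite3


def make_db():
    """Build a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_unsafe(conn, name):
    # Vulnerable: the form value becomes part of the SQL statement itself.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn, name):
    # Parameterized: the form value is bound as data, never parsed as SQL.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = make_db()
injected = "nobody' OR '1'='1"  # what an attacker might type into a form field

print(lookup_unsafe(conn, "alice"))   # one row, as the developer intended
print(lookup_unsafe(conn, injected))  # every row in the table
print(lookup_safe(conn, injected))    # no rows: nobody has that literal name
```

Fixing the query in the application is the kind of control that sits "close to the data"; a WAF or IPS tries to catch the same input one step earlier, on the wire.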

So..... What can a WAF do that a traditional firewall / Intrusion Prevention System cannot?

- All current WAF vendors provide Out-Of-The-Box Policies and Signatures for various known attacks and exploits such as SQL injection, session hijacking, cross-site scripting (XSS), cross-site request forgery (CSRF), buffer overflows, cookie tampering, remote code execution, malware, denial of service, and brute-force authentication.

- All current WAF vendors provide Out-Of-The-Box Reporting and Alerting templates for Regulatory Compliance (PCI, Sarbanes-Oxley, HIPAA, etc.).

- All current WAF vendors provide a Heuristic Learning mode that will create a "traffic baseline" over a short period of time, and then allow you to deny any traffic not following that pattern.
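A toy sketch of the first and third bullets, assuming nothing about any vendor's actual rule language: the two regular expressions in `SIGNATURES`, the "shape" of a request (its path plus parameter names), and the function names are all simplifications invented for this example. Real signature sets and learning modes are far more elaborate.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Hypothetical, deliberately tiny signature set.
SIGNATURES = {
    "sql_injection": re.compile(r"'\s*(or|and)\b|\bunion\s+select\b", re.IGNORECASE),
    "xss": re.compile(r"<\s*script\b", re.IGNORECASE),
}


def match_signatures(url):
    """Return the names of signatures that match any decoded query value."""
    values = [v for _, v in parse_qsl(urlsplit(url).query, keep_blank_values=True)]
    return sorted(
        name for name, pattern in SIGNATURES.items()
        if any(pattern.search(value) for value in values)
    )


def request_shape(url):
    """Reduce a request to its path and the set of parameter names it uses."""
    parts = urlsplit(url)
    names = frozenset(n for n, _ in parse_qsl(parts.query, keep_blank_values=True))
    return parts.path, names


def learn_baseline(urls):
    """'Learning mode': remember every request shape seen in known-good traffic."""
    return {request_shape(url) for url in urls}


def inspect(url, baseline):
    hits = match_signatures(url)
    if hits:
        return "deny: signature " + ",".join(hits)
    if request_shape(url) not in baseline:
        return "deny: outside learned baseline"
    return "allow"


baseline = learn_baseline(["/search?q=dmz", "/item?id=42"])

print(inspect("/search?q=firewalls", baseline))
print(inspect("/search?q=1%27%20OR%20%271%27%3D%271", baseline))
print(inspect("/search?q=%3Cscript%3Ealert(1)%3C%2Fscript%3E", baseline))
print(inspect("/admin/export?table=users", baseline))
```

The first request is allowed, the next two trip a signature, and the last one is denied only because nothing like it was seen while learning. That last case also shows why a learned baseline needs tuning: a legitimate but rarely used page would be blocked the same way.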

Over the past decade, the industry has come up with the Web Application Firewall Evaluation Criteria (WAFEC), a set of standards that lets us end users and security practitioners compare products and select an appropriate one.

With me so far?

Sounds like a perfectly reasonable infrastructure to add to your DMZ security arsenal.

However.... When the biggest players were put to the test here, it was demonstrated that a well-tuned IPS can be as effective as, or more effective than, a Web Application Firewall. In addition, by adding Dynamic Application Security tools to your Software Development Lifecycle, you could significantly increase the effectiveness of either the WAF or the IPS.

Automatically generated filters from dynamic application security tools (DAST) can improve vulnerability blocking effectiveness by as much as 39% for a WAF and as much as 66% on an IPS.

If you've been following my Blog to date, you know that I'm a big proponent of "Lock down access to your servers and data on the system itself! Keep your Data Security as close to the data as possible."

That said....

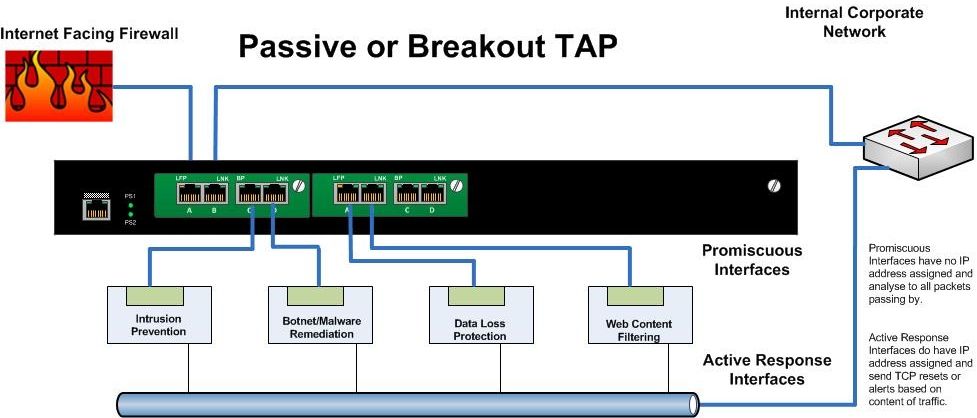

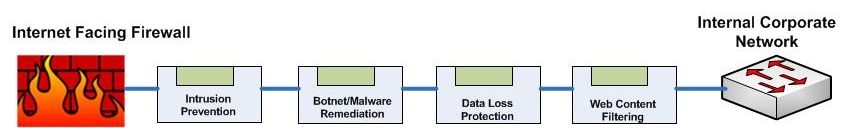

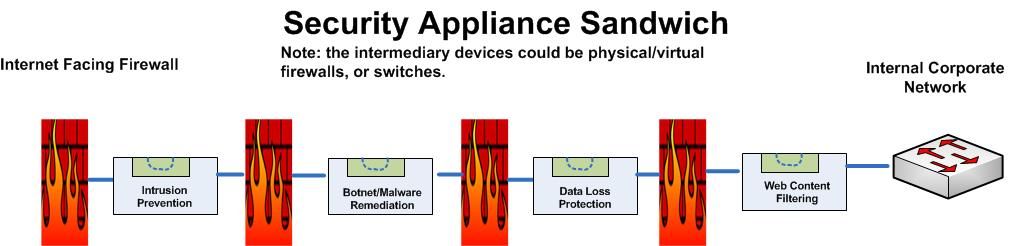

If your DMZ has a healthy mix of a Layer-3 firewall up front with a "Least Access" rule base and a decent IPS infrastructure INLINE, and you subscribe to vulnerability testing and remediation and to Software Development Lifecycle practices, then you are at least as secure as you would be with any commercial WAF solution.

If you add local host protection to your DMZ servers and tune it specifically for the Business Purpose of each asset, you will have well exceeded any benefit derived from implementing a WAF.

References:

- OWASP: Why WAFs fail

- Web Application Security Consortium

- Web Security Glossary

- ICSALabs: Importance of Web Application Firewall Technology (https://www.icsalabs.com/products?tid%5B%5D=4227)

- Analyst Report: Analyzing the Effectiveness of Web Application Firewalls

- Advanced Persistent Threats get more advanced, persistent and threatening

- Beyond Heuristics: Learning to Classify Vulnerabilities and Predict Exploits

- Network Traffic Anomaly Detection and Evaluation

- Gartner: Magic Quadrant for Dynamic Application Security Testing

- Top 5 best practices for firewall administrators

- SANS: IPS Deployment Strategies